OpusClip AI Video Creation

Building on the existing AI video clipping tool, I was responsible for adding new features that let users create AI-generated videos from multiple video sources or text prompts.

Time

3 months

(01.2024 - 04.2024)

Tools

Scope

Ideation | UI/UX Design | Usability Testing | Iterations

Achievements

Subscription conversion rate: +6.2%

Monthly active users: +8.3%

Context

My Contribution

Led the design process from research to iteration.

Tested the solution with 6 participants and collected feedback.

How do users create videos with OpusClip?

Users usually start creating videos excited and with ideas in mind. However, the process becomes increasingly painful as they go.

Pain points:

Users have ideas, but shooting videos is too much trouble.

Multiple videos are hard to edit, and users want to compare results.

Users get new raw videos and want to use them together with old videos.

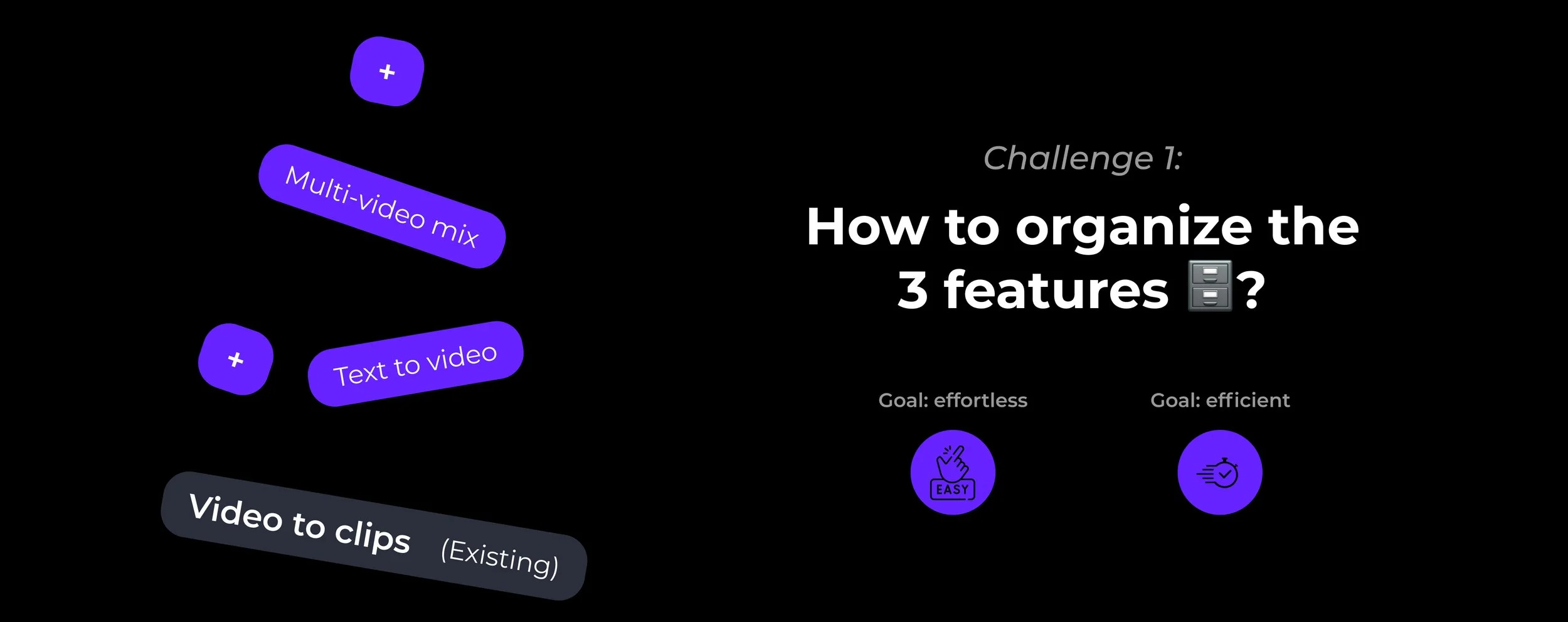

Feature Requirements

On the existing website, users get AI-generated clips from an uploaded long video.

Based on the pain points defined above, new features need to be added to the existing website.

The features are text to video, AI video mix, and a video library.

In the following case study, I will walk through the first 2 features.

How to improve the experience for business users?

Users simply upload a long video and adjust a few settings. The algorithm then automatically selects the most viral segments of the long video, adds subtitles, and scores each short video based on its viral potential.

How did I design the features?

This case study shows how I approached the problem, designed solutions, and tested the end results. Keep scrolling to read the process!

Prototype

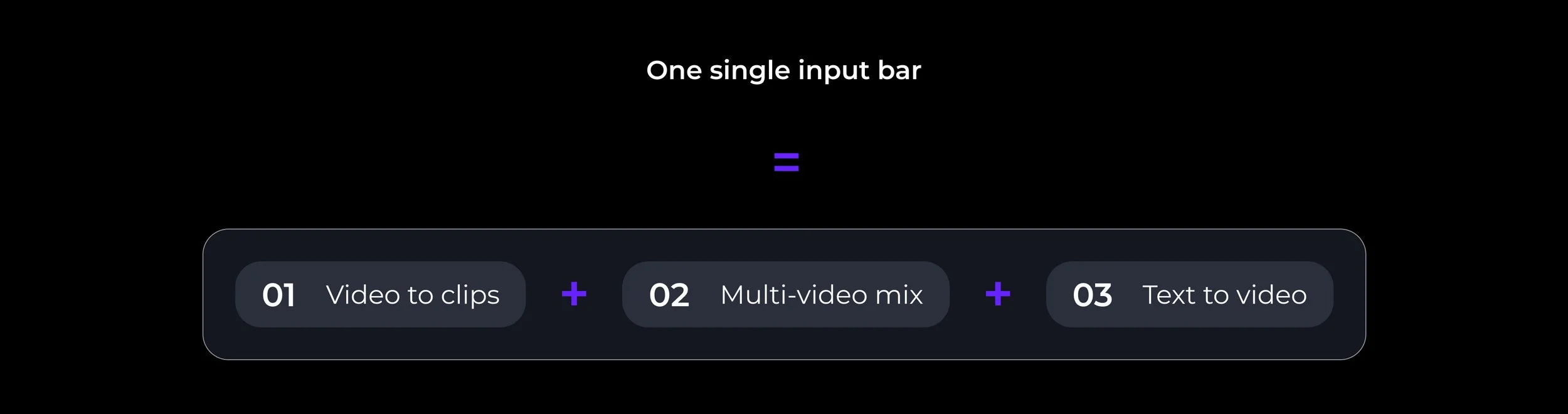

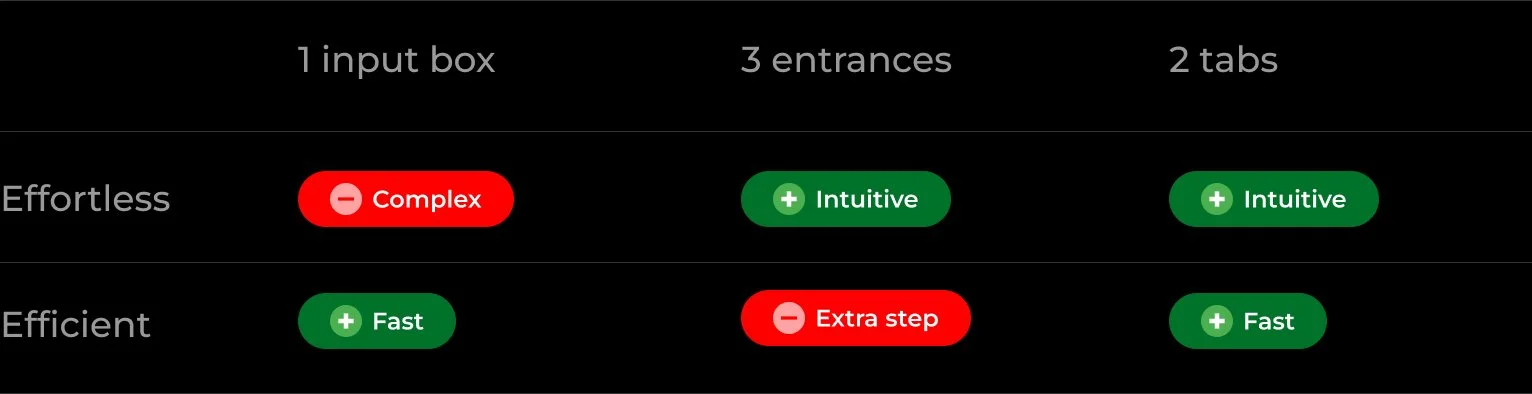

At first, I envisioned a single, robust input box that merged all functionalities. Users could input video, text, or both, and AI-generated content would be presented based on that input.

However, this interface required multitasking and confused users.

Design Solution 1: Merged Functionalities

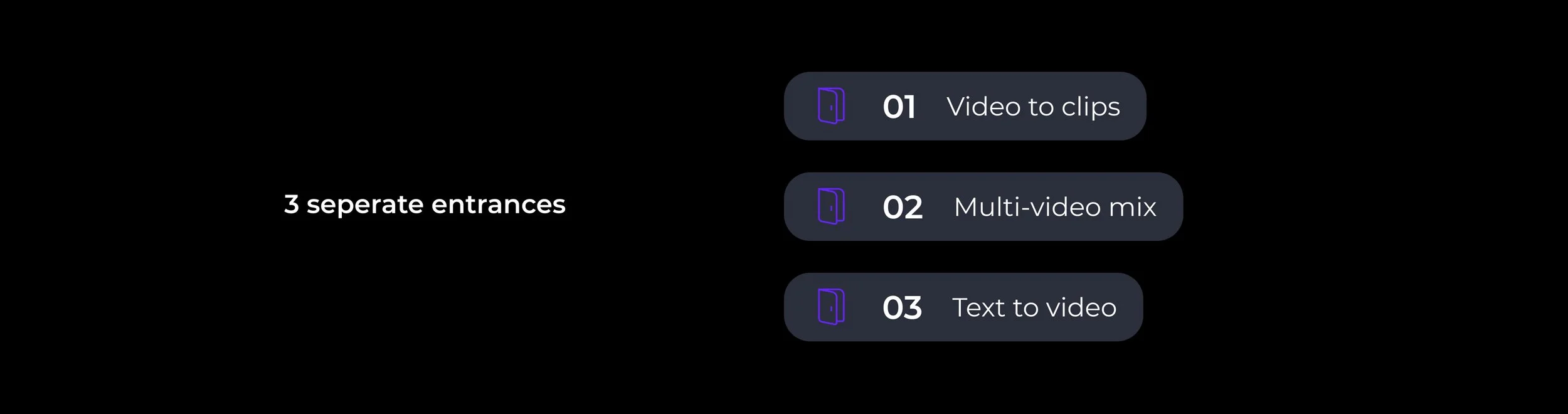

Later, I explored a clearer approach that introduces each feature as an independent entrance. With this approach, users clearly understood the information architecture.

However, it added an extra step on this interface for every user.

Design Solution 2: Separated Entrances

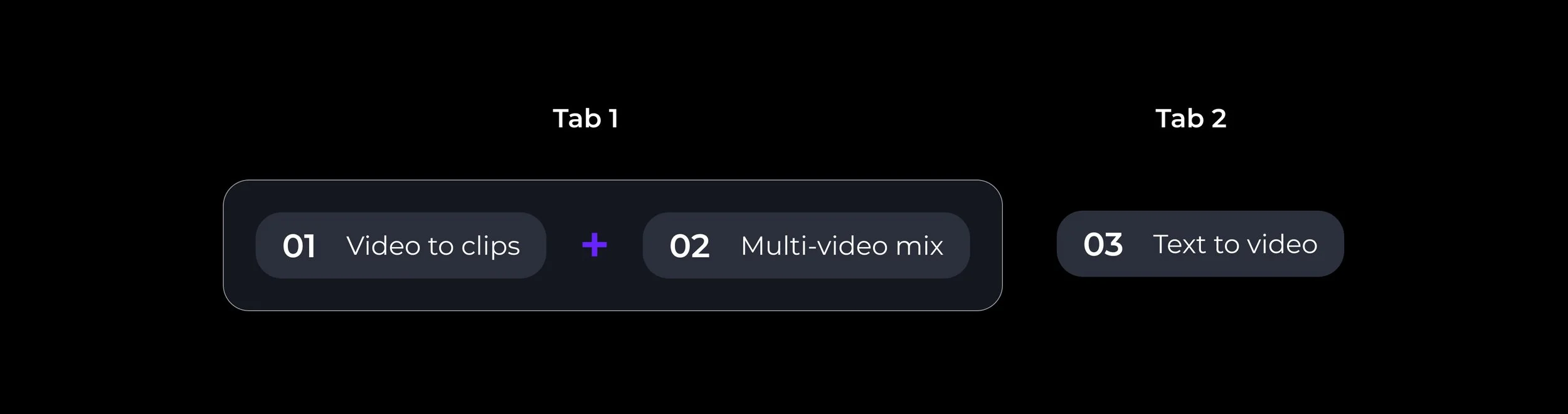

After iteration, the final design places two tabs on the homepage. “Video to clips” and “multi-video mix” are combined in the first tab, while “text-to-video” stands on its own.

Final Solution: 2 Tabs

Pros and Cons Comparison:

both effortless & efficient

Of the three options, the final solution is both intuitive and efficient: it adds no extra step, and users have only one thing to focus on at each step.

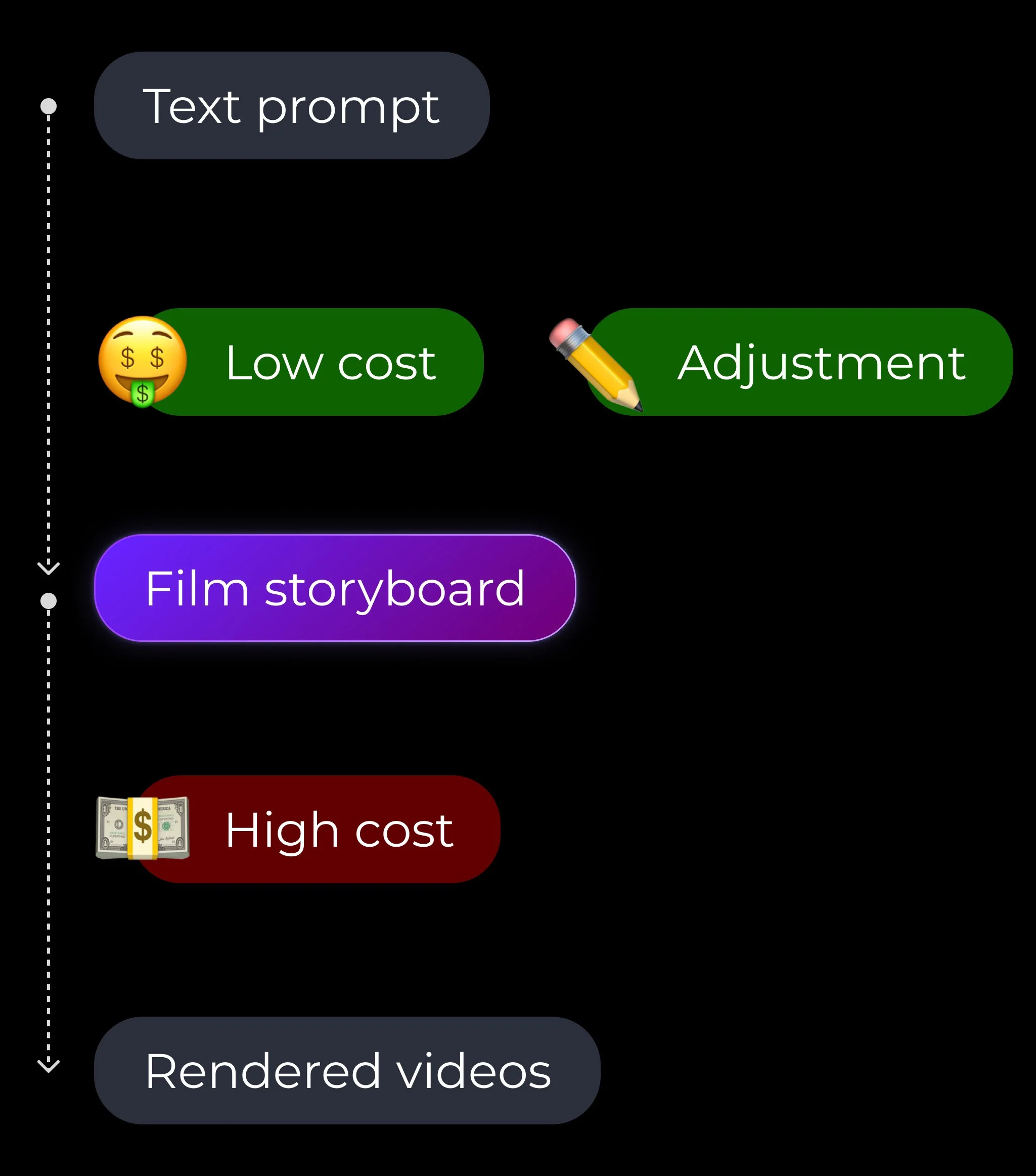

Text prompt, settings, video result

Design iteration 1

I designed a user flow that allows users to input text prompts, adjust video settings, preview the results, and select the right ones to post. In the first step, users can choose a prompt from the existing prompt library or generate a random one. When reviewing the video results, they can also tweak them before posting, which adds flexibility.

Problems:

Expensive process + Frustrated users

Constraints:

- Video generation is expensive

- Users need sufficient preview

As a result, the video generation user flow needed an intermediate step to reduce cost while keeping users satisfied.

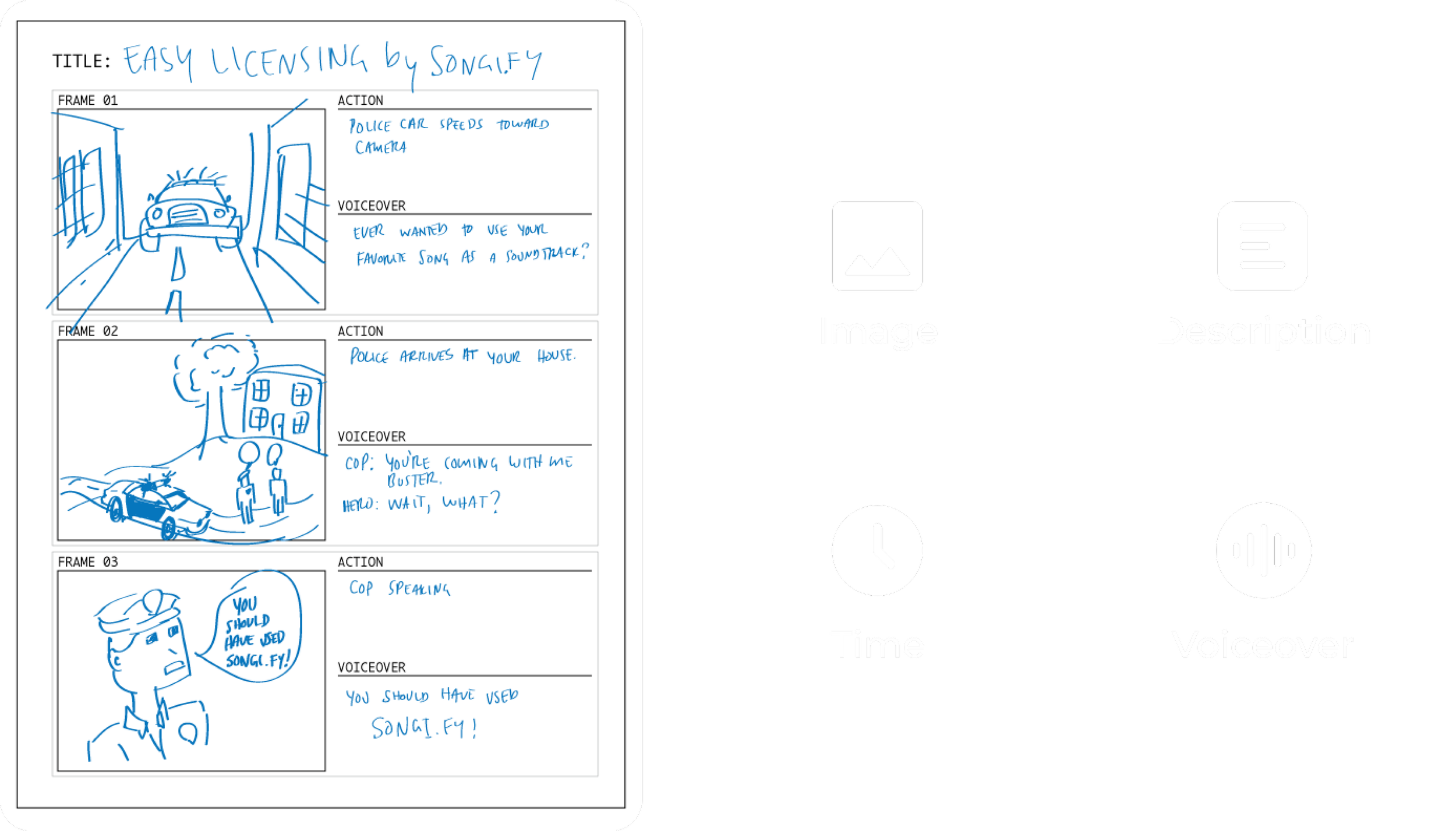

Design Inspiration

Film storyboard

To give users more control over the video result and to reduce video generation costs, I drew on the film storyboard as the intermediate step of the video generation process.

Image and text generation is much cheaper than video generation, so users can adjust a static storyboard first and render only the videos they are satisfied with. The storyboard includes an image, a description, timing, and voiceover content for users’ reference.

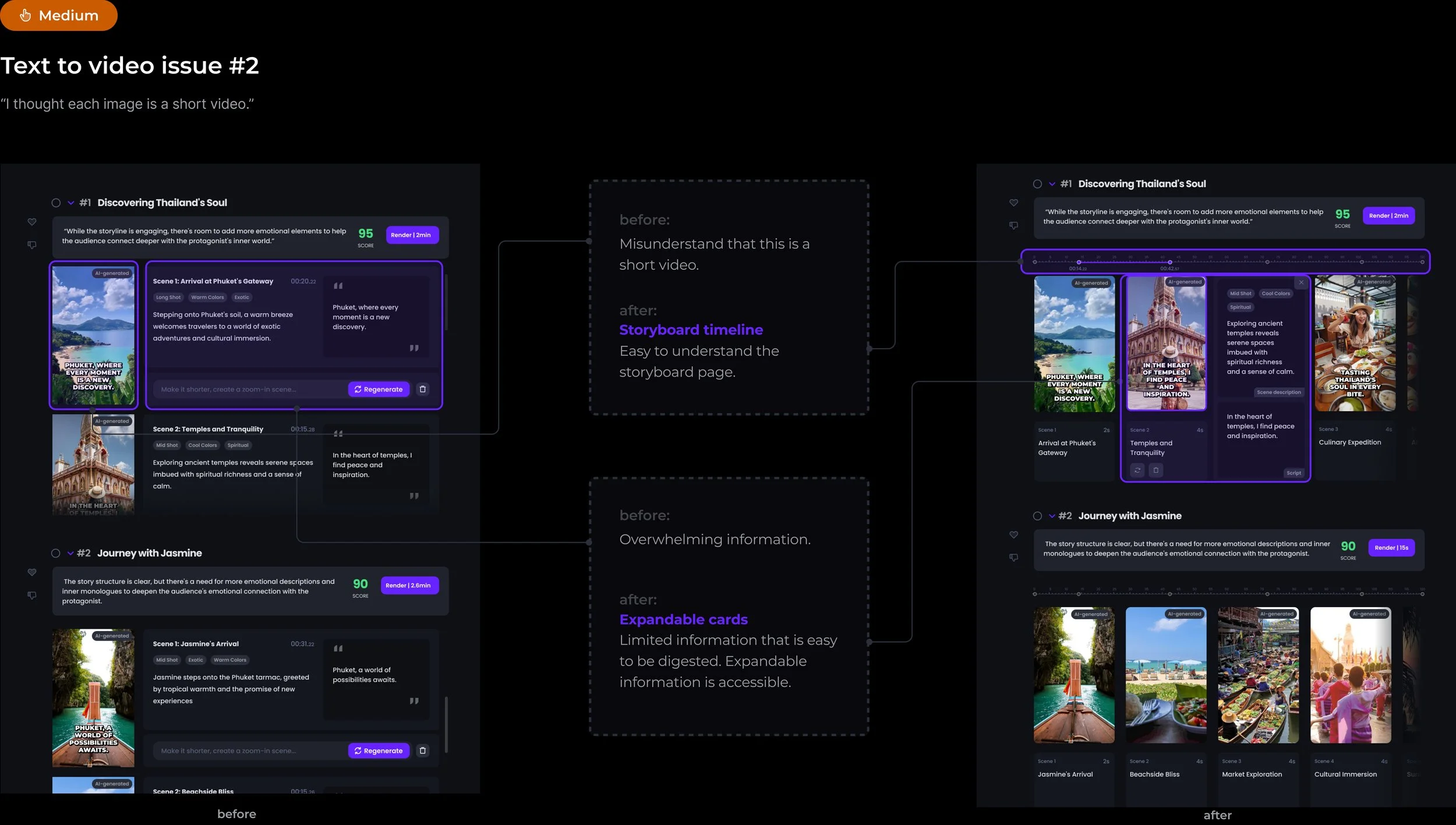

In the iterated version, I added a film storyboard that includes descriptive information for each scene.

Users can get a sense of the video by glancing at the storyboard. They can also add, delete, or regenerate scenes before rendering the final result.

Film Storyboard as middle step

I tested the prototype with 6 participants, all video editors, and collected their feedback. The test covered both the “text to video” and “video mix” features.

Time to test it out!

Test

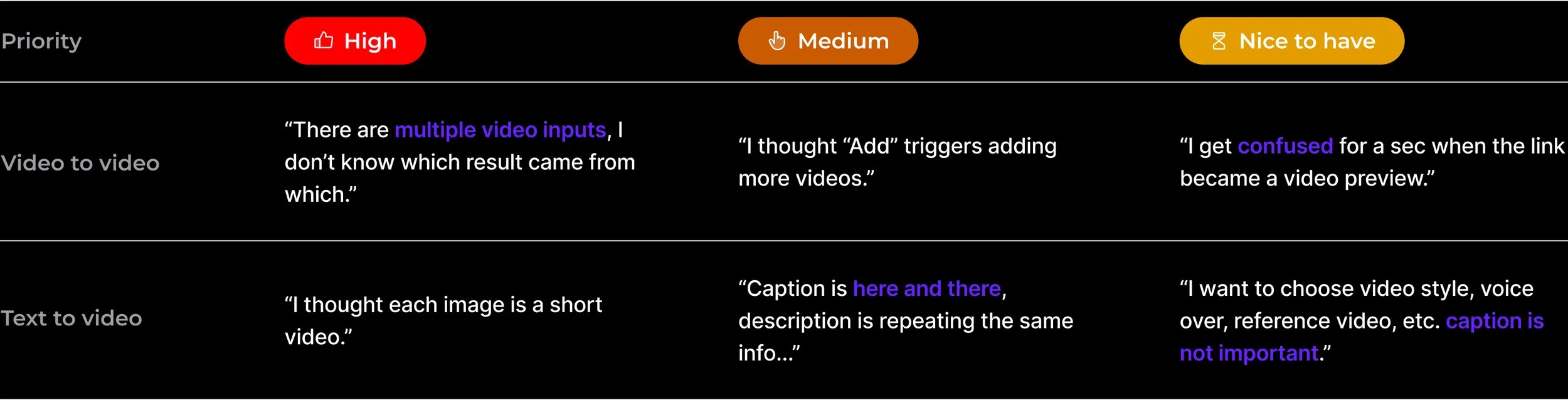

After chatting with 6 participants, I collected feedback about the overall IA and the 2 features.

The general feedback about the prototype was positive. However, participants were confused by some features, which needed further exploration.

I prioritized the issues with the team and focused on the “High” and “Medium” issues.

Participants raised a lot of useful feedback, as well as insights about potential new features, during testing. However, resources were limited, and the team needed to get the maximum outcome from limited effort. “Nice to have” features were saved for future exploration.

Iterate

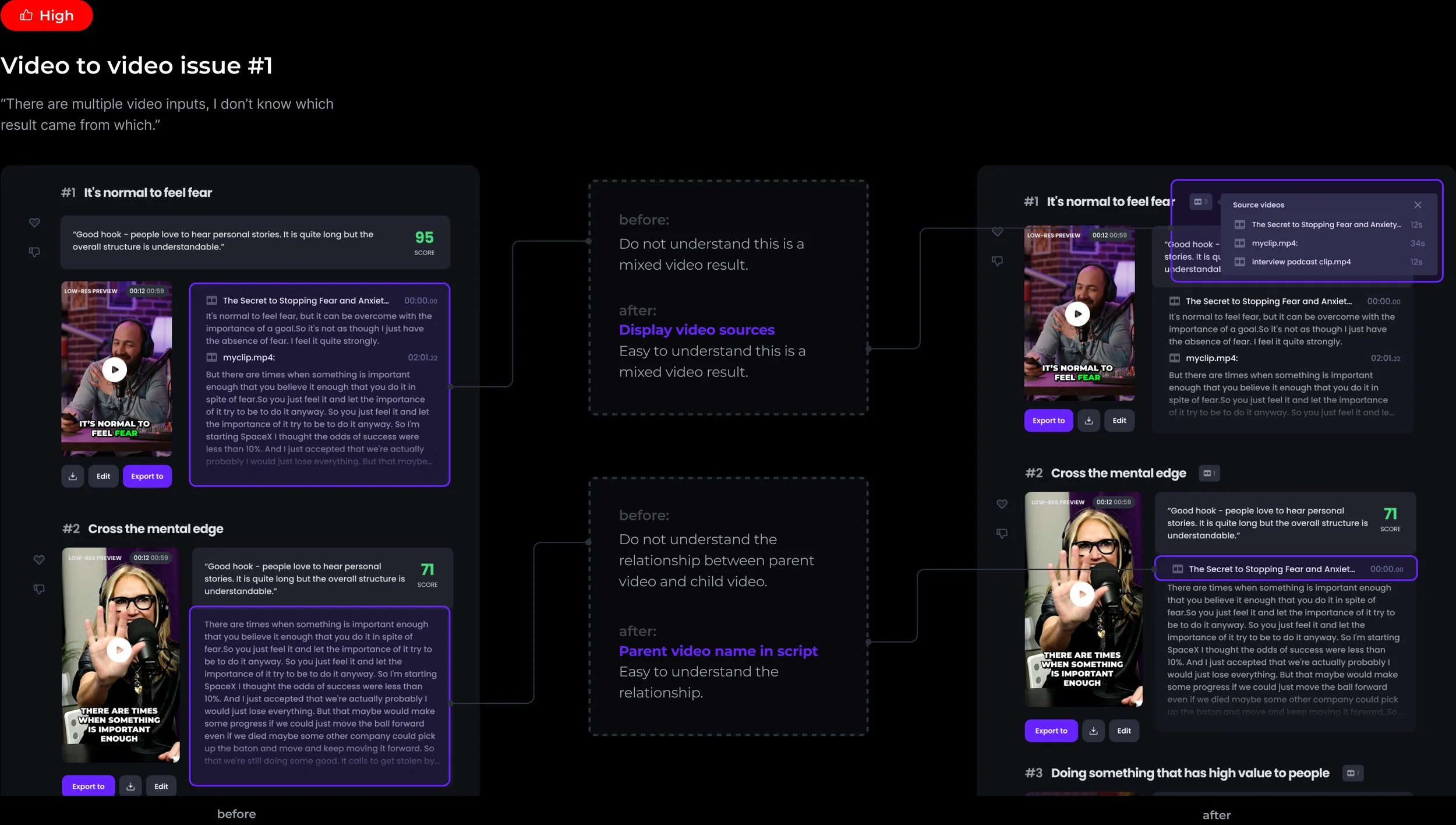

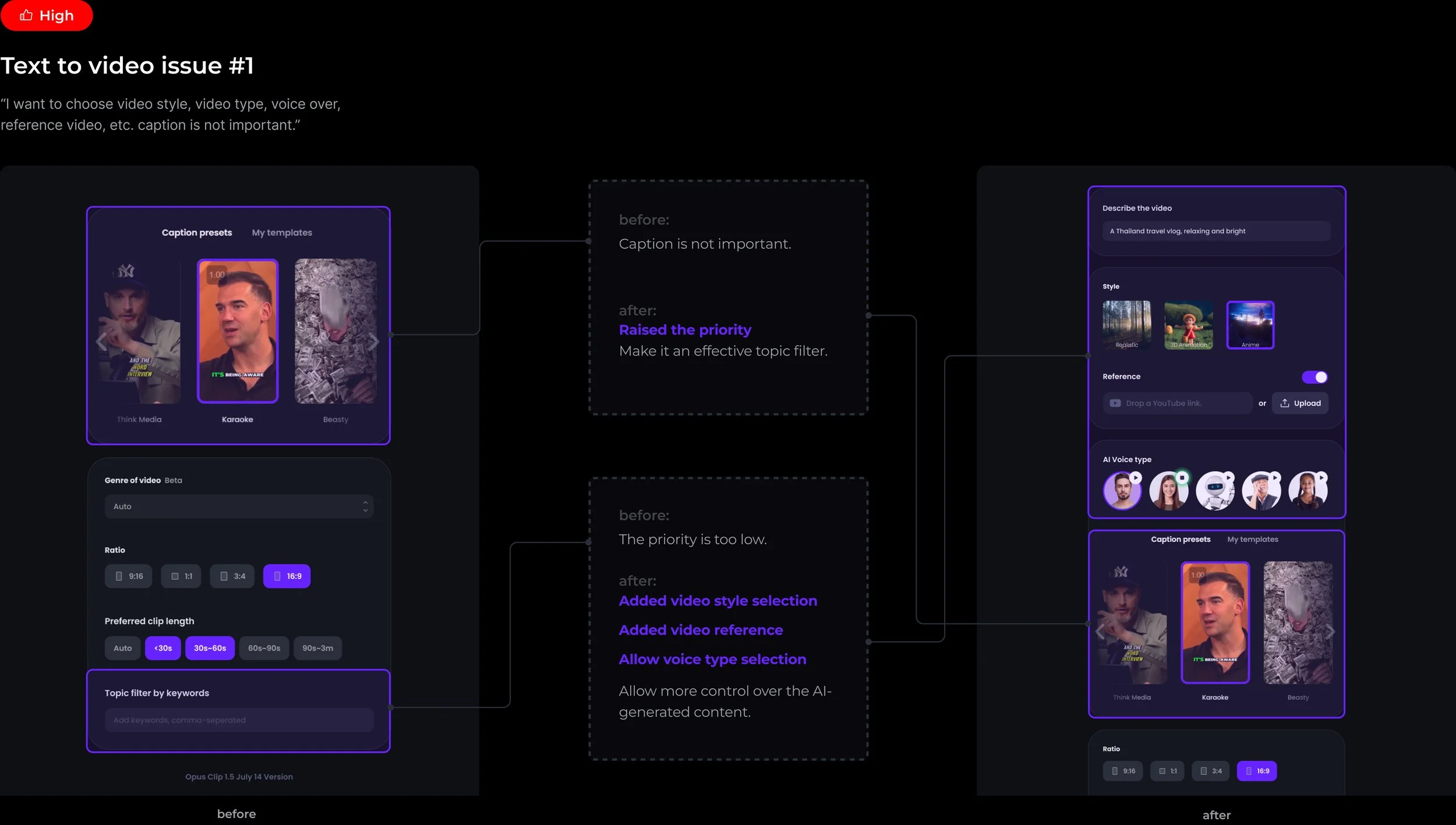

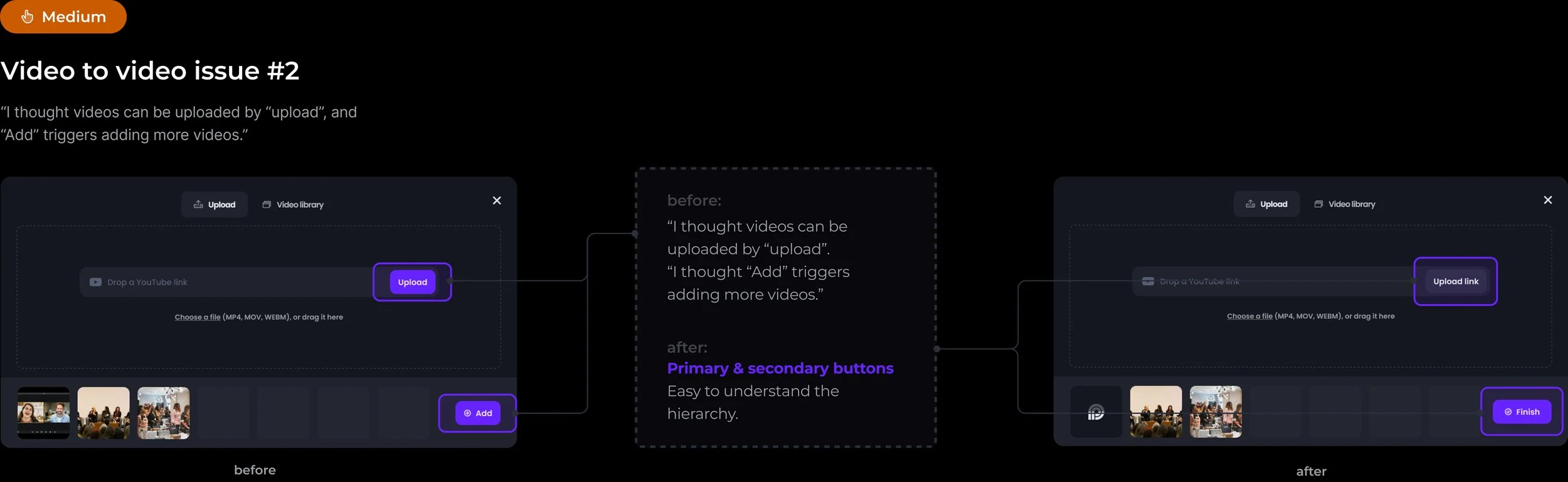

The 4 issues were addressed to simplify the user flow and lower the cognitive load for users.

The team selected 2 issues for each feature to iterate on.

- Video to video: the end result was confusing, especially because users were unsure about the relationship between parent videos and child videos. In addition, the upload pop-up window contained overwhelming information.

- Text to video: the storyboard confused users, and the preset content did not make much sense to them.

Success Metrics

Outcome

The iteration created a more intuitive and user-friendly experience, measured by several factors, including user rating, subscription conversion rate, monthly active users (MAU), and average video creation time.